Import Data with CSV Files

You can import bulk data, both user attributes and events, from your data warehouse or legacy system using comma-separated values (CSV) files. You can use this technique in all regions and with all outputs.

Use the following process to load user attribute or event data from CSV files:

- 1. Create CSV files

- 2. Get credentials for the mParticle SFTP server

- 3. Configure the Custom CSV Feed

- 4. Drop CSV files on the SFTP server

1. Create CSV files

Prepare the CSV files for import. Files must follow the guidelines in this section.

File guidelines

- Files must adhere to the RFC4180 standards for CSV formatting.

- Files must be sent in one of the following formats:

  - A plain CSV (`.csv`)

  - A ZIP file containing one or more CSV files (`.zip`)

  - A gzipped CSV (`.csv.gz`)

  - A PGP/GPG-encrypted file with the additional extension `.gpg` appended (for example, `.csv.gpg` or `.csv.gz.gpg`). Only encrypted OR unencrypted files can be accepted, but not both. You must use PGP encryption with mParticle’s public key. See Encrypted files for additional instructions.

- File sizes should be between 5 MB and 2 GB. If you upload files outside these limits, processing speed is slower. If possible, split the data across multiple small files, because their processing can be parallelized.

- Each file can only contain events of the same event type. You can’t mix events of different types in the same file.

- Don’t use subfolders.

- Each row size should be under 80 KB. Larger rows may impact performance.

- All column names must be unique.

- Each CSV file must contain fewer than 50 columns.

- File name requirements:

  - Do not use any dashes ( - ) or dots ( . ) in your file name, other than what is described below.

  - End the file name based on the event content in your file:

    - `-custom_event.csv`

    - `-commerce_event.csv`

    - `-screen_view.csv`

    - `-eventless.csv` for eventless uploads of user identities and attributes

- Column names: specify fields according to our JSON Schema, using dot notation.

  - Column names described are case sensitive.
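The file guidelines above can be checked locally before you upload anything. The following is a minimal sketch, not an mParticle tool: the function names are hypothetical, it only handles plain `.csv` names, and it assumes 1 KB means 1024 bytes when estimating row size.

```python
import csv
import io

# File name endings listed in the file name requirements above.
ALLOWED_SUFFIXES = (
    "-custom_event.csv",
    "-commerce_event.csv",
    "-screen_view.csv",
    "-eventless.csv",
)
MAX_ROW_BYTES = 80 * 1024  # "under 80 KB", assuming 1 KB = 1024 bytes


def check_file_name(name):
    """Return a list of problems found in a plain .csv file name."""
    suffix = next((s for s in ALLOWED_SUFFIXES if name.endswith(s)), None)
    if suffix is None:
        return ["name must end with one of: " + ", ".join(ALLOWED_SUFFIXES)]
    stem = name[: -len(suffix)]
    if "-" in stem or "." in stem:
        return ["no dashes or dots are allowed before the required ending"]
    return []


def check_csv_text(text):
    """Return a list of problems found in the CSV content."""
    rows = list(csv.reader(io.StringIO(text, newline="")))
    if not rows:
        return ["file is empty"]
    problems = []
    header = rows[0]
    if len(set(header)) != len(header):
        problems.append("column names must be unique")
    if len(header) >= 50:
        problems.append("file must contain fewer than 50 columns")
    for number, row in enumerate(rows[1:], start=2):
        # Approximate the row size by re-joining the parsed cells.
        if len(",".join(row).encode("utf-8")) >= MAX_ROW_BYTES:
            problems.append(f"row {number} should be under 80 KB")
    return problems


print(check_file_name("orders-custom_event.csv"))
print(check_csv_text("mpid,environment\r\n123,development\r\n"))
```

Both calls print an empty list when nothing is wrong. The checks cover only the naming, column, and row-size rules; file size limits and encryption are left out of this sketch.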

Data guidelines

- Environment: include a column named `environment` set to `development` or `production`. If an `environment` column is not included, data is ingested into the production environment.

- User and Device IDs: as with any data sent to mParticle, you must include a column with at least one user ID or device ID.

  Device IDs

  - `device_info.android_advertising_id`
  - `device_info.android_uuid`
  - `device_info.ios_advertising_id`
  - `device_info.att_authorization_status`
  - `device_info.ios_idfv`
  - `device_info.roku_advertising_id`
  - `device_info.roku_publisher_id`
  - `device_info.fire_advertising_id`
  - `device_info.microsoft_advertising_id`

  User IDs

  - `mpid`
  - `user_identities.customerid`
  - `user_identities.email`
  - `user_identities.facebook`
  - `user_identities.microsoft`
  - `user_identities.twitter`
  - `user_identities.yahoo`
  - `user_identities.other`
  - `user_identities.other2`
  - `user_identities.other3`
  - `user_identities.other4`

- User attributes:

  If you include user attributes, include a column for each one named `user_attributes.key`, where key is a user attribute key. For example:

  - `user_attributes.$FirstName`
  - `user_attributes.communication_preference`
  - `user_attributes.Member Tier`

  Attribute names with spaces are allowed and do not require quotes. All the keys listed in the JSON Reference are supported.

- Events:

  - Use a column named `events.data.timestamp_unixtime_ms` to set the event time.

  - Use a column named `events.data.custom_attributes.key`, where key is an event attribute key, to set custom event attributes. Attribute names with spaces are allowed and do not require quotes. All the keys listed in the JSON Reference are supported.

  - Screen view events: use a column named `events.data.screen_name` if you want to include the screen name.

  - Custom events: use columns named `events.data.event_name` and `events.data.custom_event_type` to include custom events.

  - Commerce events: use columns with the following names for commerce events.

    - `events.data.product_action.action`
    - `events.data.product_action.products.id`
    - `events.data.product_action.products.name`
    - `events.data.product_action.products.category`
    - `events.data.product_action.products.brand`
    - `events.data.product_action.products.variant`
    - `events.data.product_action.products.position`
    - `events.data.product_action.products.price`
    - `events.data.product_action.products.quantity`
    - `events.data.product_action.products.coupon_code`
    - `events.data.product_action.products.added_to_cart_time_ms`
    - `events.data.product_action.products.total_product_amount`
    - `events.data.product_action.products.custom_attributes`

    Only one product per event can be included for commerce events uploaded via CSV.

- Data types:

  All data in the CSV is converted to a string. The only exceptions to this are values that require a particular data type, such as MPID or IDFA.

- Only standard custom events and screen views, and eventless batches (eventless drops of user identity and attributes), have been tested.

- Attributes sent as arrays are not fully supported. When the entire array is present in a single cell of the CSV file, it is supported and is converted to a string. There is no way to specify anything but the first item in an array: if you repeat a header column, each subsequent column overwrites the previous one. Multiple columns don’t append to the array. This is why you can only include one product for ecommerce events. Commerce events in the Events API support arrays in multiple places, but with CSV files, you can only populate a single item in each of these arrays.

- Custom manifest: You can use a custom manifest to drop files created in another system without transforming them. For details, see Use a custom manifest.
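To make the column naming concrete, here is a minimal sketch that builds the text of a custom event file with Python's standard `csv` module. The column names come from the guidelines above; the helper names, the attribute key `plan`, and every sample value (including the event type `other`) are illustrative assumptions, not values required by mParticle.

```python
import csv
import io
from datetime import datetime, timezone

# Column names follow the dot notation described above.
# "plan" is a hypothetical custom attribute key used only for illustration.
COLUMNS = [
    "environment",
    "user_identities.customerid",
    "user_identities.email",
    "user_attributes.Member Tier",
    "events.data.timestamp_unixtime_ms",
    "events.data.event_name",
    "events.data.custom_event_type",
    "events.data.custom_attributes.plan",
]


def to_unixtime_ms(moment):
    """Convert a timezone-aware datetime to Unix time in milliseconds."""
    return int(moment.timestamp() * 1000)


def build_custom_event_csv(rows):
    """Return CSV text with a header row followed by one line per event."""
    buffer = io.StringIO(newline="")
    # RFC 4180 uses CRLF line endings, which is also the csv module default.
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\r\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


sample = {
    "environment": "development",
    "user_identities.customerid": "customer-001",
    "user_identities.email": "user@example.com",
    "user_attributes.Member Tier": "gold",
    "events.data.timestamp_unixtime_ms": to_unixtime_ms(
        datetime(2024, 1, 1, tzinfo=timezone.utc)
    ),
    "events.data.event_name": "Plan Selected",
    "events.data.custom_event_type": "other",
    "events.data.custom_attributes.plan": "annual",
}

print(build_custom_event_csv([sample]))
```

Writing the result to a file whose name ends in `-custom_event.csv` keeps it consistent with the file name requirements. Each header appears exactly once, which avoids the overwrite behavior described for repeated columns.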

2. Get credentials for the mParticle SFTP server

mParticle maintains an SFTP server where you will drop your CSV files. Use the following instructions to securely retrieve your credentials and find the hostname and path to use when you drop your files on the SFTP server.

To get your SFTP username and password:

- Sign up for a Keybase account with your work email at https://keybase.io/. Keybase is a secure tool which includes end-to-end encrypted chat.

- Provide your Keybase account name to your Customer Success Manager or your mParticle Solutions Consultant so that they can pass it on to our Ops team.

- Expect to receive your SFTP access credentials in a Keybase chat from mParticle. Note that if you need to use credentials that you already have, you’ll share those credentials in the Keybase chat.

3. Configure the Custom CSV Feed

Configure the Custom CSV Feed as input. This step provides the hostname and folder path on the SFTP server where your CSV files must be dropped.

To configure the Custom CSV Feed:

- Go to Data Platform > Setup > Inputs using the left hand nav and select the Feeds tab.

-

Using the Add Feed Input + dropdown menu, search for and select Custom CSV Feed.

- If you’ve already added the Custom CSV Feed, it won’t show up in the list. Scroll through the list of feeds until you see Custom CSV Feed, and then click the large plus sign in the gray bar to create a new feed. You need one feed for each different event type.

-

Enter the following values:

- Configuration Name: enter a name that makes this feed easy to recognize in your list of feeds.

- Custom Event Name: if you are importing a custom event, enter the name that will be used for the custom event.

- Custom Event Type: if you entered a custom event name, select the event type.

- Custom Manifest: if you are using a custom manifest, paste it in the text box provided.

- Expect Encrypted Files: if you will import a PGP/GPG-encrypted file, select this option.

-

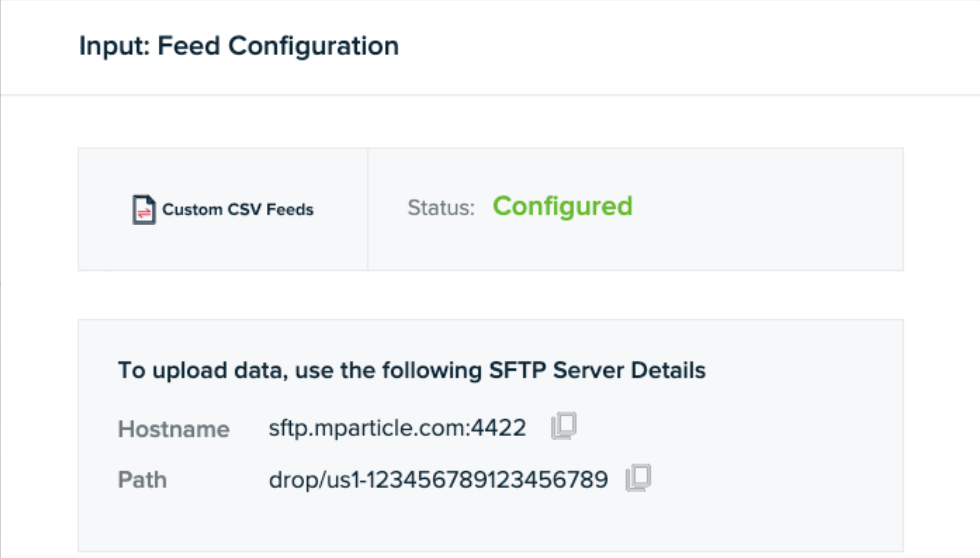

After you complete the connection configuration, click Issue SFTP Details. mParticle displays your hostname and path for mParticle’s SFTP server.

4. Drop CSV files on the SFTP server

- Connect to the mParticle SFTP server using the credentials provided. Once you have connected, mParticle creates the `drop` folder. If you don’t see one, create a folder named `drop`.

- Create a new folder inside the `drop` folder, and name it using the pathname provided in the mParticle UI as shown in the previous section. For example, based on the previous example, the folder path and name is `sftp.mparticle.com:4422:drop/us1-123456789123456789/`. Hint: Verify that there are no trailing spaces in the name.

- Use your credentials to upload your CSV files to mParticle’s SFTP server, using the correct path and folder name from the previous step.
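As a small illustration of the path handling in these steps, the sketch below splits a details string into host, port, and folder, and rejects stray spaces. It assumes the `host:port:folder` layout seen in the example path above; the function and type names are hypothetical, and the upload itself is left to whichever SFTP client you use.

```python
from typing import NamedTuple


class SftpTarget(NamedTuple):
    host: str
    port: int
    folder: str


def parse_sftp_details(details):
    """Split a 'host:port:folder' string, as in the example path above."""
    if details != details.strip():
        raise ValueError("remove leading or trailing spaces from the path")
    host, port, folder = details.split(":", 2)
    return SftpTarget(host, int(port), folder.rstrip("/"))


def remote_path(target, file_name):
    """Build the remote path for a file inside the issued folder."""
    return f"{target.folder}/{file_name}"


target = parse_sftp_details("sftp.mparticle.com:4422:drop/us1-123456789123456789/")
print(target.host, target.port)
print(remote_path(target, "orders-custom_event.csv"))
```

Failing fast on whitespace mirrors the hint about trailing spaces, since a folder name with an invisible space is easy to create and hard to spot afterwards.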

Files dropped on the SFTP server are added to the processing queue almost immediately. Depending on file count and file size, a backlog may develop. You can observe how much data has been processed using Data Master and your outbound connections. There is no notification of processing progress or completion.

- Last Updated: February 18, 2026