
mParticle Command Line Interface

Overview

The mParticle Command Line Interface (CLI) can be used to communicate with various mParticle services and functions through simple terminal commands.

Through the CLI, an engineer can interface directly with many of mParticle’s services without making HTTP requests by hand (for example, with cURL or Postman). Many of these commands can also be integrated into Continuous Integration/Continuous Deployment (CI/CD) systems.

Installation

The CLI is distributed as an npm package. Install it globally, then run mp followed by a command:

$ npm install -g @mparticle/cli

$ mp [COMMAND]

running command...

$ mp (-v|--version|version)

@mparticle/cli/1.X.X darwin-x64 node-v10.XX.X

$ mp --help [COMMAND]

USAGE

$ mp COMMAND

Verifying installation

To verify your installation and version, run mp --version:

$ mp --version

@mparticle/cli/1.X.X darwin-x64 node-v10.XX.X

Getting Started

Run mp help to view a list of the available commands.

$ mp help

mParticle Command Line Interface

VERSION

@mparticle/cli/1.X.X darwin-x64 node-v10.XX.X

USAGE

$ mp [COMMAND]

COMMANDS

autocomplete display autocomplete installation instructions

help display help for mp

planning Manages Data Planning

Setting up autocomplete

As a convenience, the CLI provides autocomplete: type part of a command, then press <TAB> to complete it.

Run mp autocomplete for instructions on configuring this feature.

Staying up to date

To upgrade to the latest version, run npm install -g @mparticle/cli again.
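In a CI/CD job you may only want to install the CLI when it is missing. The following POSIX shell sketch is one way to do that; the function names ensure_mp_cli and upgrade_mp_cli are hypothetical, and the sketch assumes npm is on the PATH.

```shell
# Hypothetical helper: install the mParticle CLI only when `mp` is not available.
ensure_mp_cli() {
  if command -v mp >/dev/null 2>&1; then
    # Already installed: report the version string printed by the CLI.
    echo "mParticle CLI found: $(mp --version)"
  else
    echo "mParticle CLI not found; installing @mparticle/cli globally"
    npm install -g @mparticle/cli
  fi
}

# Hypothetical helper: re-running the global install upgrades to the latest release.
upgrade_mp_cli() {
  npm install -g @mparticle/cli
}
```

Call ensure_mp_cli at the start of a pipeline step, and upgrade_mp_cli wherever you deliberately want the newest release.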

Configuration

CLI commands accept flags such as authentication credentials or record identifiers. Some of these parameters can instead be stored in an optional configuration file, mp.config.json, and shared between commands or with other mParticle applications.

The CLI automatically searches the current working directory for a valid JSON file named mp.config.json.

Alternatively, you can pass in a JSON file with the --config=/path/to/config flag.

For example, if you need to store configs for multiple projects, you could keep them in a central location and pass either a relative or an absolute path to the CLI:

$ mp planning:data-plan-versions:fetch --config=$HOME/.myconfigs/custom.config.json

We recommend keeping a single mp.config.json file at the root of your project and always running the CLI from that root. If you use our data planning linters, the file must be named mp.config.json and kept at the root of your project folder.
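If you keep configs in a central location, a small wrapper can confirm the file exists before passing it with --config. This is a sketch only: mp_with_config and the MP_CONFIG variable are hypothetical names. It builds the path from $HOME because most shells do not expand a ~ that follows --config=.

```shell
# Hypothetical wrapper: run an mp command against a shared config file.
# MP_CONFIG is an assumed variable; the default mirrors the example path above.
mp_with_config() {
  config="${MP_CONFIG:-$HOME/.myconfigs/custom.config.json}"
  if [ ! -f "$config" ]; then
    echo "mp config not found: $config" >&2
    return 1
  fi
  # The shell expands $HOME above; a literal ~ after --config= would not be expanded.
  mp "$@" --config="$config"
}
```

For example, mp_with_config planning:data-plan-versions:fetch runs the fetch command with the shared config appended.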

Example mp.config.json file

{
  "global": {
    "workspaceId": "XXXXX",
    "clientId": "XXXXXX",
    "clientSecret": "XXXXXXXXX"
  },
  "planningConfig": {
    "dataPlanVersionFile": "./path/to/dataPlanVersionFile.json"
  }
}

global

This object contains settings for your account credentials and application.

- workspaceId: The identifier for your team’s workspace
- clientId: A unique client identification string provided by your Customer Success Manager
- clientSecret: A secret key provided by your Customer Success Manager

We recommend always including these three credentials in your configuration, as they are used by other Platform API services, such as Data Planning.
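Because clientSecret is a secret, you may prefer not to commit mp.config.json. One option is to generate the file in CI from environment variables. A minimal sketch: write_mp_config and the MP_WORKSPACE_ID, MP_CLIENT_ID, and MP_CLIENT_SECRET variable names are hypothetical, and the values are assumed to contain no characters that need JSON escaping.

```shell
# Hypothetical helper: write the "global" credentials block of mp.config.json
# from environment variables (the variable names are assumptions, not CLI conventions).
write_mp_config() {
  out="${1:-mp.config.json}"
  if [ -z "${MP_WORKSPACE_ID:-}" ] || [ -z "${MP_CLIENT_ID:-}" ] || [ -z "${MP_CLIENT_SECRET:-}" ]; then
    echo "MP_WORKSPACE_ID, MP_CLIENT_ID and MP_CLIENT_SECRET must all be set" >&2
    return 1
  fi
  # Values are inserted verbatim, so they must not contain quotes or backslashes.
  cat > "$out" <<EOF
{
  "global": {
    "workspaceId": "$MP_WORKSPACE_ID",
    "clientId": "$MP_CLIENT_ID",
    "clientSecret": "$MP_CLIENT_SECRET"
  }
}
EOF
  # Restrict the file to the current user, since it contains a secret.
  chmod 600 "$out"
}
```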

planningConfig

These settings pertain to your project’s Data Master resources, such as data plans and data plan versions. planningConfig is required if you use our data plan linting tools (see Linting Data Plans). Note that the JSON you download from the UI under Data Master > Plans is a specific data plan version.

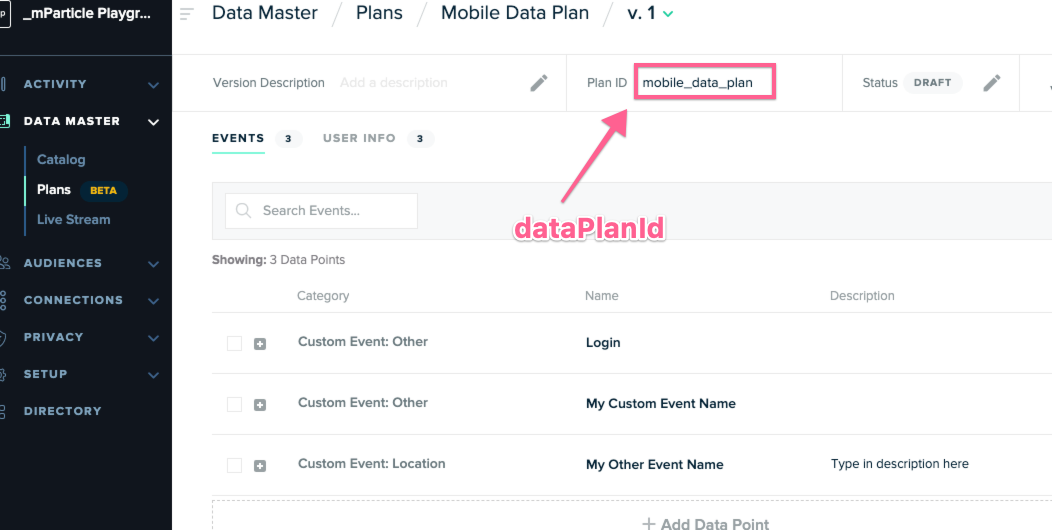

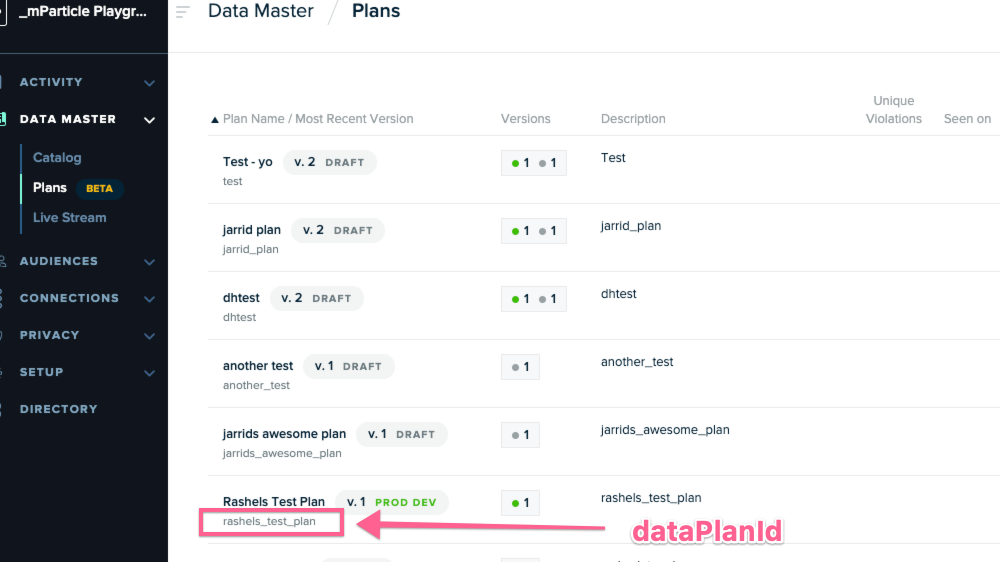

- dataPlanVersionFile: A relative or absolute path to your desired data plan version file (used in place of dataPlanFile and versionNumber)
- dataPlanId: The ID of your current data plan
- dataPlanFile: A relative or absolute path to your data plan file (must be used with versionNumber)
- versionNumber: The current version number of your data plan (must be used with dataPlanFile)

Workflow

At its core, the CLI exposes services in a way that is consistent with our REST APIs. Each command offers its own set of subcommands, arguments, and flags.

The CLI also provides universal flags for global functions, such as --help or --outFile.

The CLI command structure is as follows:

mp [COMMAND]:[SUBCOMMAND]:[SUBCOMMAND] --[flag]=[value] [args...]

By default, every command writes to standard output. Adding the --outFile=/path flag writes the response to a file instead, such as a log file or a JSON file, depending on the use case.
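If you want every run to leave its output behind, for example as a CI artifact, a wrapper can generate the --outFile path for you. A sketch: mp_save, the MP_LOG_DIR variable, and the timestamped file naming are hypothetical choices; only the --outFile flag comes from the CLI.

```shell
# Hypothetical wrapper: run an mp command, send its response to a timestamped file
# via --outFile, and print that file's path on success.
mp_save() {
  dir="${MP_LOG_DIR:-logs}"
  mkdir -p "$dir" || return $?
  # Turn the command name into a file-name prefix: planning:data-plans:fetch -> planning_data-plans_fetch
  name="$(printf '%s' "$1" | tr ':' '_')"
  file="$dir/${name}-$(date +%Y%m%d%H%M%S).json"
  mp "$@" --outFile="$file" || return $?
  echo "$file"
}
```

For example, mp_save planning:data-plans:fetch --dataPlanId=XXXXXX prints a path such as logs/planning_data-plans_fetch-<timestamp>.json.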

The CLI provides a --help flag that lists all accepted parameters and flags for a command, as well as a list of commands. In addition, running a top-level command displays its subcommands.

Authentication

Any CLI command that requires mParticle HTTP API resources supports two authentication options: you can pass credentials either (1) on the command line or (2) in an mp.config.json file at the root of your project.

Both methods internally generate a bearer token on your behalf, as described in Platform API Authentication.

Credentials Required:

- Workspace ID: Available in Managing Workspace.

- Client ID & Client Secret: In Managing Workspace, click the specific workspace to open a pop-up showing a Key and Secret. Use these as your Client ID and Client Secret.

via CLI

Pass your authentication credentials with the following CLI flags:

$ mp [COMMAND]:[SUBCOMMAND] --workspaceId=XXXX --clientId=XXXXX --clientSecret=XXXXXX

via Configuration File

To integrate with various services, we recommend adding an mp.config.json file to the root of your project. This lets you set properties related to your mParticle account as well as other project settings, such as the path to your data plan version file.

For more information on mp.config.json, see Configuration.

For example, to authenticate, make sure the following is in your mp.config.json file:

// mp.config.json
{
  "global": {
    "workspaceId": "XXXXX",
    "clientId": "XXXXXX",
    "clientSecret": "XXXXXXXXX"
  }
}

You can reference this configuration file with the --config flag. In addition, the CLI searches your current working directory for mp.config.json.

Services

Data Planning

The CLI exposes commands for creating, fetching, updating, and deleting data plans, as well as for validating your events against a downloaded Data Plan.

All of these services require Platform API authentication credentials (clientId, clientSecret, and workspaceId), supplied via mp.config.json or CLI arguments, as well as Data Planning access.
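If you prefer not to keep a config file, the three credentials can be passed as flags on every planning command. A sketch: mp_planning and the MP_* variable names are hypothetical, and it assumes the workspace flag is spelled --workspaceId, like the config key. Command-line arguments can be visible to other users of a machine through process listings, so the config file may be the better choice on shared hosts.

```shell
# Hypothetical wrapper: call a planning subcommand with credentials read from
# environment variables and passed as --workspaceId/--clientId/--clientSecret.
mp_planning() {
  sub="$1"
  shift
  if [ -z "${MP_WORKSPACE_ID:-}" ] || [ -z "${MP_CLIENT_ID:-}" ] || [ -z "${MP_CLIENT_SECRET:-}" ]; then
    echo "set MP_WORKSPACE_ID, MP_CLIENT_ID and MP_CLIENT_SECRET first" >&2
    return 1
  fi
  mp "planning:$sub" "$@" \
    --workspaceId="$MP_WORKSPACE_ID" \
    --clientId="$MP_CLIENT_ID" \
    --clientSecret="$MP_CLIENT_SECRET"
}
```

For example, mp_planning data-plans:fetch --dataPlanId=XXXXXX expands to mp planning:data-plans:fetch with the credential flags appended.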

Fetching Data Plans and Data Plan Versions

To fetch a Data Plan, pass its dataPlanId as a flag. The Data Plan must already exist on the server; otherwise, the command fails.

$ mp planning:data-plans:fetch --dataPlanId=XXXXXX

To fetch a Data Plan Version, use mp planning:data-plan-versions:fetch and pass a dataPlanId and versionNumber.
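To keep a copy of a data plan version alongside your code, for example as the file that planningConfig.dataPlanVersionFile points to, you could combine the version fetch with --outFile. A sketch: fetch_plan_version and the default ./dataplans/ location are hypothetical, and it assumes --outFile writes the fetched JSON to the given path.

```shell
# Hypothetical helper: download one data plan version to a local JSON file.
fetch_plan_version() {
  plan_id="${1:-}"
  version="${2:-}"
  if [ -z "$plan_id" ] || [ -z "$version" ]; then
    echo "usage: fetch_plan_version DATA_PLAN_ID VERSION_NUMBER [OUT_FILE]" >&2
    return 2
  fi
  # Default output location is an assumption; pass a third argument to override it.
  out="${3:-./dataplans/${plan_id}-v${version}.json}"
  mkdir -p "$(dirname "$out")"
  mp planning:data-plan-versions:fetch \
    --dataPlanId="$plan_id" \
    --versionNumber="$version" \
    --outFile="$out"
}
```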

Creating a Data Plan and Data Plan Versions

Use the following command to create a Data Plan resource (or Data Plan Version) on the server:

$ mp planning:data-plans:create --dataPlan="{ // Data plan as string //}"

Alternatively, replace --dataPlan with --dataPlanFile to pass the path to a locally stored data plan.

For example:

$ mp planning:data-plans:create --dataPlanFile=/path/to/dataplan/file.json

To create a Data Plan Version, use mp planning:data-plan-versions:create and pass a dataPlanId as an additional flag.
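If your project keeps several data plans as JSON files, a loop can create each one with --dataPlanFile. A sketch: create_plans_from_dir and the one-plan-per-file directory layout are assumptions, and the loop stops at the first command that fails.

```shell
# Hypothetical helper: create one data plan per JSON file in a directory.
create_plans_from_dir() {
  dir="${1:-./dataplans}"
  found=0
  for file in "$dir"/*.json; do
    # If nothing matches, the glob stays literal; skip that case.
    [ -f "$file" ] || continue
    found=1
    echo "creating data plan from $file"
    mp planning:data-plans:create --dataPlanFile="$file" || return $?
  done
  if [ "$found" -eq 0 ]; then
    echo "no .json files in $dir" >&2
    return 1
  fi
}
```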

Editing a Data Plan and Data Plan Versions

To edit an existing Data Plan (or Data Plan Version) on the server, use the following:

$ mp planning:data-plans:update --dataPlanId=XXXX --dataPlan="{ // Data plan as string //}"

Alternatively, replace --dataPlan with --dataPlanFile to pass the path to a locally stored data plan.

For example:

$ mp planning:data-plans:update --dataPlanId=XXXXX --dataPlanFile=/path/to/dataplan/file

To update a Data Plan Version, use mp planning:data-plan-versions:update with --dataPlanVersionFile, and pass a dataPlanId as an additional flag.
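After editing a downloaded version file locally, you can push it back with a small wrapper that checks its arguments first. A sketch: update_plan_version is a hypothetical name, and it uses only the flags named above, so run mp planning:data-plan-versions:update --help to confirm whether your CLI version also expects a versionNumber.

```shell
# Hypothetical helper: push a locally edited data plan version back to the server.
update_plan_version() {
  plan_id="${1:-}"
  file="${2:-}"
  if [ -z "$plan_id" ] || [ ! -f "$file" ]; then
    echo "usage: update_plan_version DATA_PLAN_ID DATA_PLAN_VERSION_FILE" >&2
    return 2
  fi
  mp planning:data-plan-versions:update --dataPlanId="$plan_id" --dataPlanVersionFile="$file"
}
```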

Deleting a Data Plan and Data Plan Versions

To delete a Data Plan, pass its dataPlanId to the delete command:

$ mp planning:data-plans:delete --dataPlanId=XXXX

Deleting a Data Plan Version is similar, but uses the data-plan-versions command and requires an additional versionNumber flag:

$ mp planning:data-plan-versions:delete --dataPlanId=XXXXX --versionNumber=XX

Validating against a Data Plan

Validating an Event Batch is more complex, so the CLI lets you run validation either locally or via the server, depending on your needs. Local validation does not make a request to our servers, so it is faster and well suited to a CI/CD environment.

$ mp planning:batches:validate --batch="{ // batch as string}" --dataPlanVersion="{ // data plan version as string }"

This runs your batch locally against a data plan version and prints any validation results to the console.
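In CI you might validate every saved batch in a directory. The sketch below uses the file-based flags --batchFile and --dataPlanVersionFile together with --outFile; validate_batches and the directory names are hypothetical. It makes no assumption about how the CLI reports validation failures through its exit code, so inspect the results files; the loop only stops if the command itself fails.

```shell
# Hypothetical CI step: validate each saved event batch against a data plan
# version file, writing one results file per batch with --outFile.
validate_batches() {
  plan_version_file="${1:-}"
  batch_dir="${2:-}"
  results_dir="${3:-validation-results}"
  if [ ! -f "$plan_version_file" ] || [ ! -d "$batch_dir" ]; then
    echo "usage: validate_batches PLAN_VERSION_FILE BATCH_DIR [RESULTS_DIR]" >&2
    return 2
  fi
  mkdir -p "$results_dir"
  count=0
  for batch in "$batch_dir"/*.json; do
    [ -f "$batch" ] || continue
    name="$(basename "$batch" .json)"
    mp planning:batches:validate \
      --batchFile="$batch" \
      --dataPlanVersionFile="$plan_version_file" \
      --outFile="$results_dir/$name.results.json" || return $?
    count=$((count + 1))
  done
  echo "validated $count batch file(s); results in $results_dir"
}
```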

This command also supports an --outFile flag that writes the validation results to a file in your local directory, in case you want to save them for future use.

For less verbose validation commands, --batch and --dataPlanVersion have file-based counterparts, --batchFile and --dataPlanVersionFile. You can also supply the data plan with --dataPlan or --dataPlanFile together with --versionNumber.
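The two ways of identifying the data plan can be chosen from environment variables, mirroring the planningConfig rule that a version file stands alone while a data plan file needs a version number. A sketch: validate_batch_file and the DATA_PLAN_VERSION_FILE, DATA_PLAN_FILE, and VERSION_NUMBER variables are hypothetical.

```shell
# Hypothetical helper: validate one batch file, preferring a data plan version file
# and falling back to a data plan file plus version number.
validate_batch_file() {
  batch_file="${1:-}"
  if [ ! -f "$batch_file" ]; then
    echo "usage: validate_batch_file BATCH_FILE" >&2
    return 2
  fi
  if [ -n "${DATA_PLAN_VERSION_FILE:-}" ]; then
    mp planning:batches:validate --batchFile="$batch_file" \
      --dataPlanVersionFile="$DATA_PLAN_VERSION_FILE"
  elif [ -n "${DATA_PLAN_FILE:-}" ] && [ -n "${VERSION_NUMBER:-}" ]; then
    mp planning:batches:validate --batchFile="$batch_file" \
      --dataPlanFile="$DATA_PLAN_FILE" --versionNumber="$VERSION_NUMBER"
  else
    echo "set DATA_PLAN_VERSION_FILE, or DATA_PLAN_FILE together with VERSION_NUMBER" >&2
    return 1
  fi
}
```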


- Last Updated: July 3, 2025